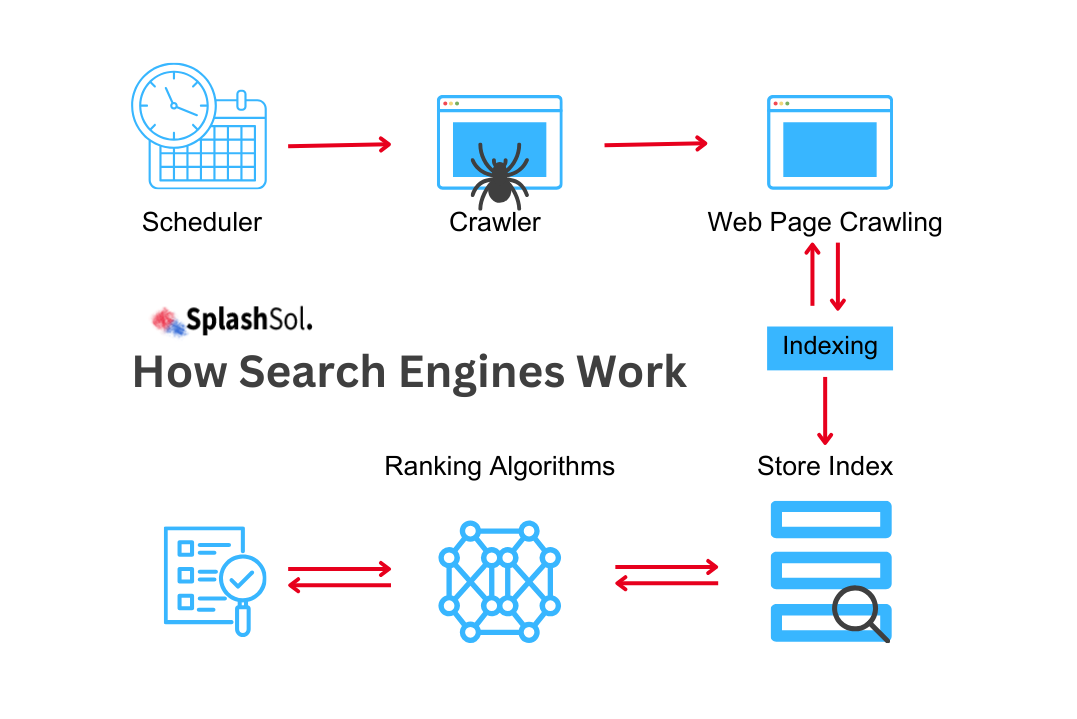

As of April 2023, Google had indexed an estimated 25 billion web pages. This number changes constantly, with pages being added and removed every day.

In the competitive field of Internet and digital marketing, getting your website’s pages indexed by search engines is crucial for online visibility and success.

However, many website owners find themselves grappling with the perplexing issue of pages not getting indexed.

In this comprehensive blog, we will discuss 15 common reasons behind this problem and explore solutions to ensure your web pages are not left in the dark corners of the internet.

| Problem |

Description |

Solution |

| Robots.txt Issues |

The robots.txt file, often overlooked, plays a pivotal role in instructing search engine bots on which pages to crawl and index. However, misconfigurations in this file can inadvertently block access to essential pages, impeding the indexing process. |

A thorough examination of the robots.txt file is necessary. Ensure that no unintentional restrictions are placed on critical pages, allowing search engine bots to navigate and index seamlessly. |

| Noindex Meta Tag |

The subtle but powerful “noindex” meta tag can be a silent culprit. When applied unintentionally to pages, it signals search engines not to index them. While this is beneficial for certain pages like confirmation or thank-you pages, it becomes problematic when applied to vital content. |

Regularly audit your meta tags, removing the “noindex” directive from pages where indexing is essential. This ensures that search engines recognise and index your valuable content. |

| Canonicalisation Issues |

Canonical tags are a valuable tool in guiding search engines to the preferred version of a page, particularly when similar content exists on multiple URLs. However, misuse or incorrect implementation of canonical tags can lead to confusion, hindering the efficient indexing of your pages. |

Review and ensure the correct application of canonical tags. They should accurately point to the preferred version of your pages, guiding search engines to index the right content. |

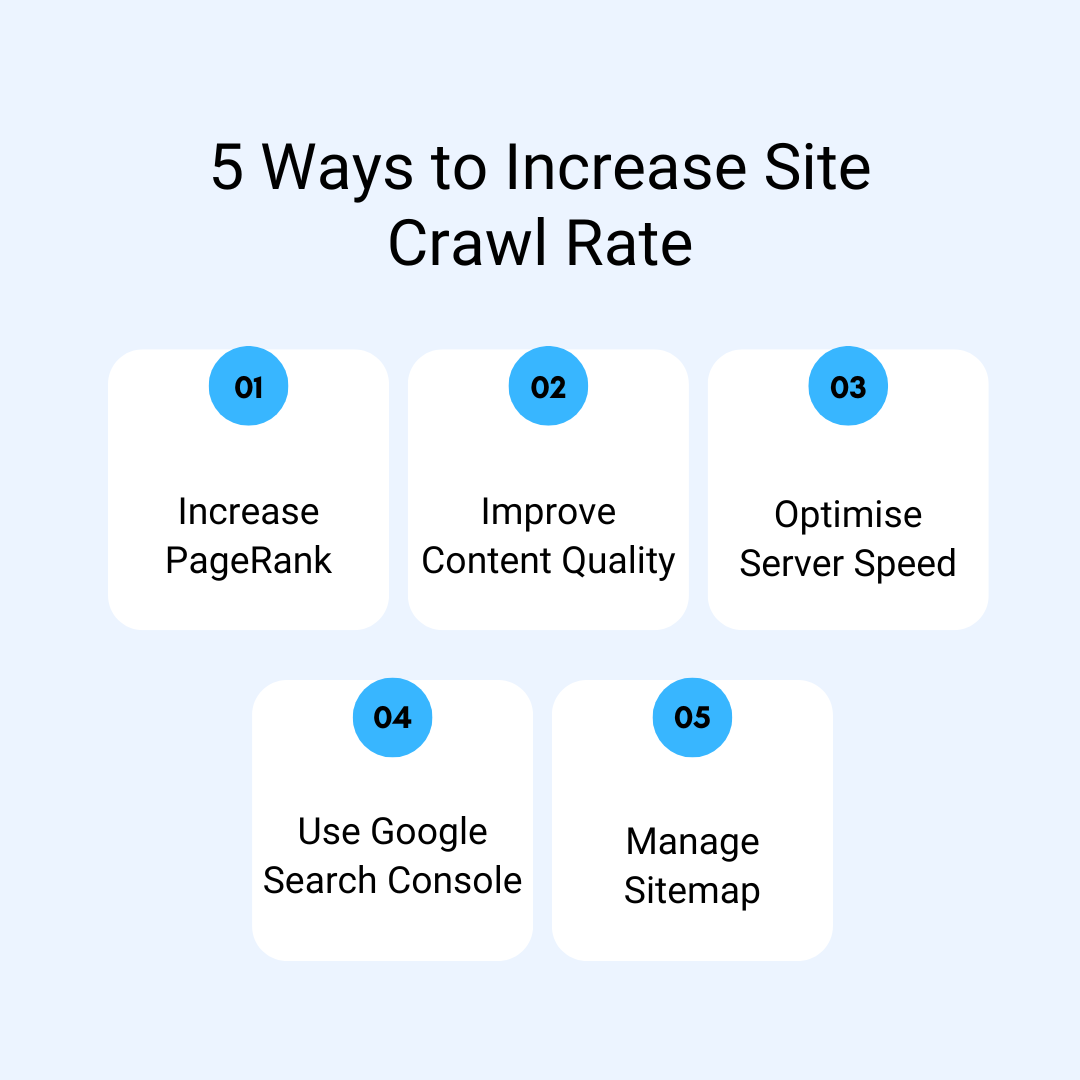

| XML Sitemap Errors |

The XML sitemap serves as a roadmap for search engines, helping them discover and index pages on your website. However, errors within the sitemap, such as broken links or outdated information, can be a stumbling block. |

Regularly update and review your XML sitemap. Ensure it accurately reflects your website’s structure and content, providing a clear and error-free path for search engine crawlers. |

| Page Loading Speed |

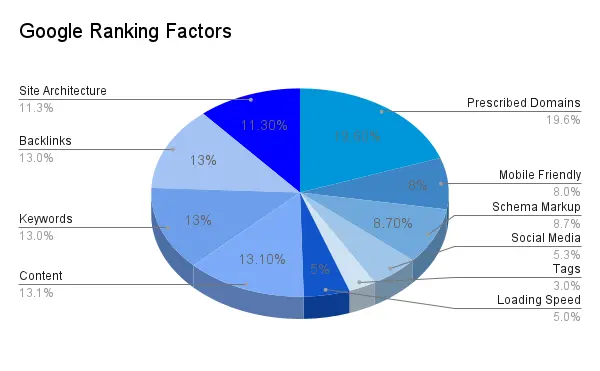

In instant gratification, page loading speed is not merely a user experience concern but a critical factor in search engine algorithms. Slow-loading pages can deter efficient crawling and indexing by search engine bots, impacting your website’s overall visibility. |

Optimise your website’s performance by compressing images, leveraging browser caching, and employing content delivery networks (CDNs). This ensures swift loading times, facilitating seamless indexing. |

| Mobile-Friendliness |

The shift towards mobile-first indexing underscores the importance of having a mobile-friendly website. Non-responsive or mobile-unfriendly pages can face challenges in efficient indexing, affecting overall search engine visibility. |

Ensure your website employs a responsive design that adapts seamlessly to various devices. Regularly test its mobile-friendliness using tools like Google’s Mobile-Friendly Test to guarantee optimal indexing. |

| Duplicate Content |

Search engines offer diverse and relevant results to users. Instances of duplicate content can trigger search engines to avoid indexing certain pages to prevent redundancy in search results. |

Conduct a thorough audit of your website to identify and rectify duplicate content. Utilise canonical tags to guide search engines in indexing the primary version of your content. |

| Server Issues |

The reliability and speed of your server play a crucial role in facilitating consistent access for search engine bots. Frequent server downtime or slow response times can disturb the crawling and indexing process. |

Regularly monitor your server’s performance, addressing any downtime promptly. Ensure fast response times to guarantee search engine bots can access and index your pages efficiently. |

| URL Structure Problems |

A well-structured URL not only helps user navigation but also assists search engines in understanding the hierarchy of your website. Convoluted URLs with unnecessary parameters can impede effective indexing. |

Optimise your URL structure for clarity and relevance. Utilise descriptive keywords and ensure a logical hierarchy that helps search engine bots understand the context of your pages. |

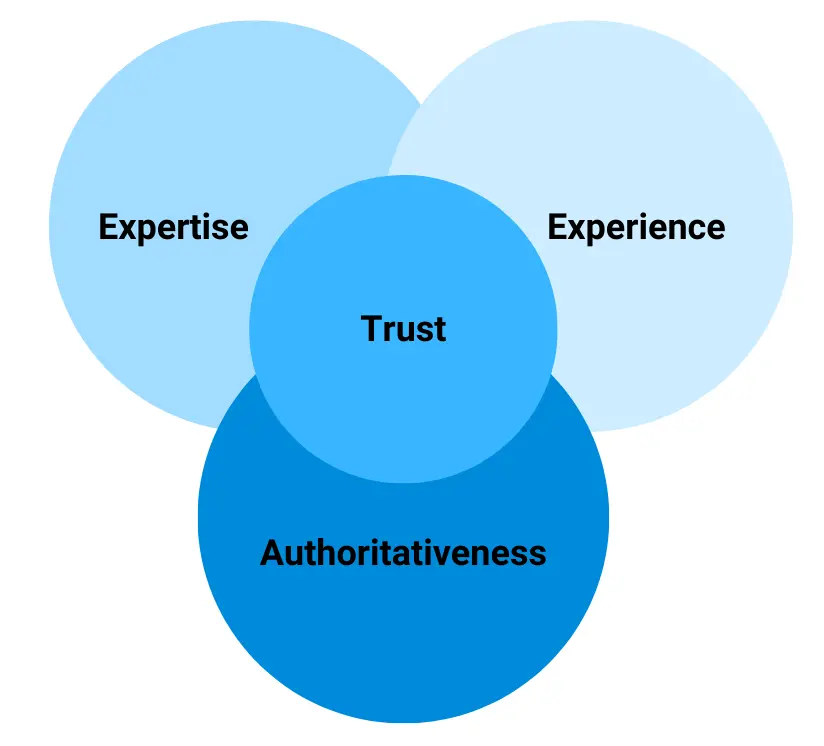

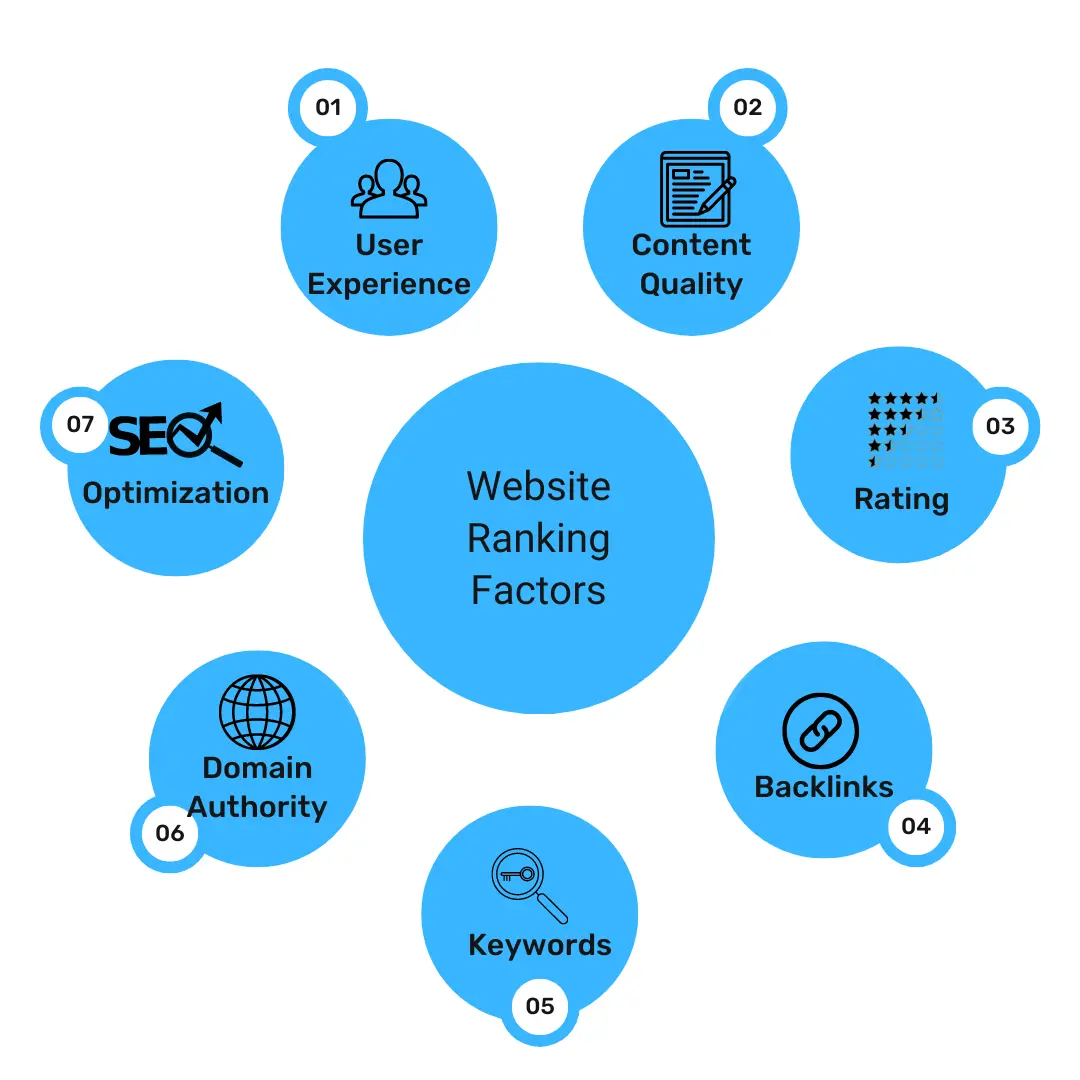

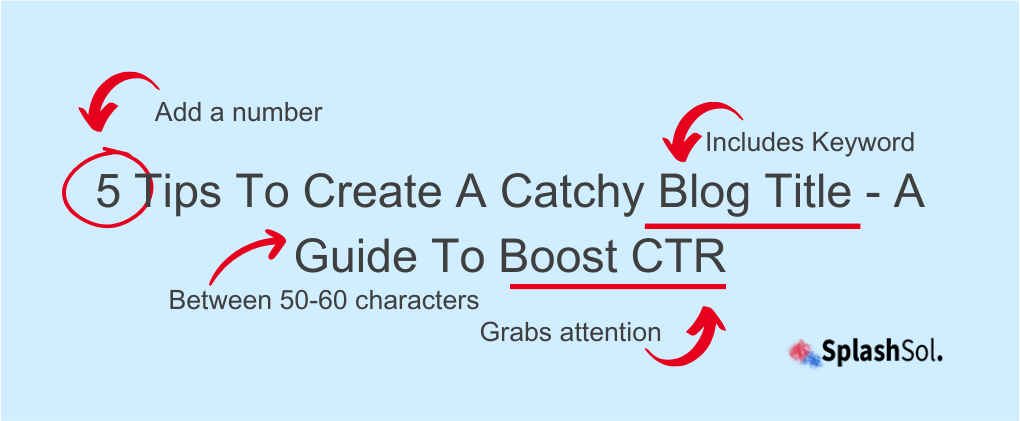

| Content Quality |

Content is the backbone of online visibility. Low-quality or thin content or blog titles with no catch may not meet the standards set by search engines, leading to pages being disregarded during the indexing process. |

Invest in creating high-quality, informative content that adds value to users. Align your content with search engine guidelines, ensuring it meets the criteria for effective indexing. |

| SSL Certificate Issues |

In an age where cybersecurity is important, search engines prioritise secure websites. Websites lacking a valid SSL certificate may face penalties, impacting both indexing and ranking. |

Implement an SSL certificate to ensure your website is secure. This not only enhances indexing but also builds trust with users, contributing to a positive online experience. |

| Broken Internal Links |

Internal links are the arteries of your website, guiding search engine bots through the various pages. Broken internal links create roadblocks for your content’s smooth navigation and indexing. |

Conduct regular audits to identify and fix broken internal links. Maintaining a healthy internal linking structure ensures search engine bots can seamlessly traverse your website. |

| Issues with JavaScript |

While search engines have become more adept at crawling JavaScript, complexities within this scripting language can still pose challenges. If critical content relies heavily on JavaScript, it may not be indexed effectively. |

Ensure important content is accessible without relying solely on JavaScript. Provide alternative methods for search engine bots to access and understand your content, ensuring comprehensive indexing. |

| Pagination Problems |

For websites with extensive content organised through pagination, clear signals are essential for search engines to understand the structure. Improper implementation or lack of pagination signals can lead to indexing issues. |

Implement rel=”next” and rel=”prev” tags to indicate the pagination structure. This not only helps search engines understand the organisation of your content but also ensures comprehensive indexing. |

| Manual Actions Or Penalties |

In cases where a website violates search engine guidelines, manual actions or penalties may be imposed. This can result in specific pages not being indexed as a consequence of non-compliance. |

Regularly review and adhere to search engine guidelines to avoid penalties. In the event of a penalty, take corrective actions promptly and submit a reconsideration request to restore normal indexing. |
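The robots.txt check recommended in the table above can be sketched in Python with the standard library's `urllib.robotparser`. This is only an illustration: the rules in `ROBOTS_TXT`, the `example.com` URLs, and the helper name `blocked_urls` are all assumptions made up for the example, and you would substitute your own file and your own list of important pages.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content; in practice, load your site's real file.
ROBOTS_TXT = """\
User-agent: *
Disallow: /admin/
Disallow: /blog/
"""


def blocked_urls(robots_txt: str, urls: list[str], user_agent: str = "Googlebot") -> list[str]:
    """Return the URLs that the robots.txt rules forbid user_agent from crawling."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(user_agent, url)]


# Hypothetical list of pages you expect to be crawlable.
important_pages = [
    "https://example.com/",
    "https://example.com/blog/indexing-guide",
    "https://example.com/products/widget",
]

print(blocked_urls(ROBOTS_TXT, important_pages))
# ['https://example.com/blog/indexing-guide']
```

Here the `Disallow: /blog/` rule blocks a page that was probably meant to be indexed, which is exactly the kind of unintentional restriction worth catching.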

Conclusion

Understanding why your website’s pages are not being indexed is an essential step towards improving your online visibility.

Addressing these common issues is not merely a checklist but a strategic roadmap to ensure your pages are not left undiscovered.

Regular monitoring and optimisation, guided by these solutions, will not only improve indexing but will also contribute to a user-friendly and search engine-friendly online environment.

As you navigate the dynamic field of online presence, the search for effective indexing becomes an ongoing journey, ensuring your pages are not only crawled but prominently featured in the search engine results that define your digital success.

FAQs

Why aren’t my website pages getting indexed?

Several factors could be contributing to this issue, including misconfigurations in your robots.txt file, the presence of a “noindex” meta tag, or problems with your XML sitemap. Identifying and rectifying these issues is crucial for successful indexing.
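One of the causes named above, a stray “noindex” meta tag, is easy to test for once you have a page's HTML. The following is a minimal sketch using Python's built-in `html.parser`; the class name `RobotsMetaParser` and the function `has_noindex` are hypothetical names chosen for this example. It inspects only meta tags in the HTML, so a noindex sent through the `X-Robots-Tag` HTTP header would not be detected.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives of every robots-style <meta> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = {name: (value or "") for name, value in attrs}
        # "robots" addresses all crawlers; "googlebot" addresses Google only.
        if attr.get("name", "").lower() in {"robots", "googlebot"}:
            for directive in attr.get("content", "").split(","):
                self.directives.add(directive.strip().lower())


def has_noindex(html: str) -> bool:
    """Return True if the page asks search engines not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    # "none" is shorthand for "noindex, nofollow".
    return "noindex" in parser.directives or "none" in parser.directives


page = '<html><head><meta name="robots" content="noindex, follow"></head></html>'
print(has_noindex(page))  # True
```

Running a check like this across your key landing pages is one way to carry out the regular meta tag audit.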

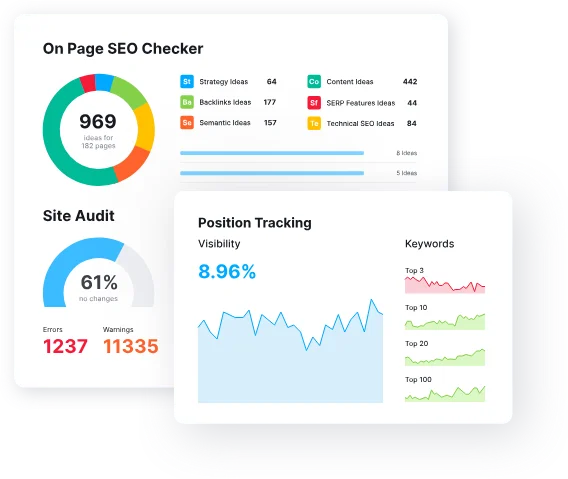

How can I check if a specific page is indexed by search engines?

You can use the “site:” operator in Google search (e.g., site:yourwebsite.com/page) to see if a particular page is indexed.

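Bear in mind that “site:” results are only a rough guide. For a more reliable answer, use the URL Inspection tool in Google Search Console, or the equivalent webmaster tools of other search engines, which report the indexing status of individual pages.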
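If you need to spot-check many pages, the “site:” query can be turned into a link programmatically. This small sketch only builds the search URL for you to open in a browser; the function name `site_query_url` is a hypothetical helper, and `yourwebsite.com/page` is the placeholder from the example above.

```python
from urllib.parse import urlencode


def site_query_url(page: str) -> str:
    """Build a Google search URL that runs a site: query for the given page."""
    return "https://www.google.com/search?" + urlencode({"q": f"site:{page}"})


print(site_query_url("yourwebsite.com/page"))
# https://www.google.com/search?q=site%3Ayourwebsite.com%2Fpage
```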

What role does page loading speed play in indexing?

Page loading speed directly influences the efficiency of search engine bots in crawling and indexing your pages. Slow-loading pages can lead to incomplete indexing or even non-indexing.

Optimising your website’s performance is essential for successful indexing.

Are duplicate content issues affecting my page indexing?

Yes, duplicate content can be a significant hindrance to indexing. Search engines aim to provide diverse and relevant results to users, so having duplicate content across your site can lead to certain pages not being indexed.

Use canonical tags and address duplicate content issues for better indexing.
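To make the canonical tag advice concrete, here is a rough sketch that reads the canonical link from a page's HTML and reports how it relates to the page's own URL. The names `CanonicalParser` and `canonical_status`, the status strings, and the `example.com` URLs are assumptions for illustration, and the URL comparison is deliberately simple (it ignores only a trailing slash).

```python
from html.parser import HTMLParser


class CanonicalParser(HTMLParser):
    """Collect the href of every <link rel="canonical"> tag in a page."""

    def __init__(self) -> None:
        super().__init__()
        self.canonicals: list[str] = []

    def handle_starttag(self, tag, attrs):
        if tag != "link":
            return
        attr = {name: (value or "") for name, value in attrs}
        if "canonical" in attr.get("rel", "").lower().split():
            self.canonicals.append(attr.get("href", ""))


def canonical_status(page_url: str, html: str) -> str:
    """Describe the canonical tag situation of a page as a short status string."""
    parser = CanonicalParser()
    parser.feed(html)
    if not parser.canonicals:
        return "missing"
    if len(set(parser.canonicals)) > 1:
        return "conflicting"
    target = parser.canonicals[0]
    if target.rstrip("/") == page_url.rstrip("/"):
        return "self"
    return f"points to {target}"


html = '<head><link rel="canonical" href="https://example.com/shoes"></head>'
print(canonical_status("https://example.com/shoes?colour=red", html))
# points to https://example.com/shoes
```

A status of "missing" or "conflicting" on a page with near-duplicate variants suggests the canonical tags need attention, while "points to ..." on a page you want indexed in its own right may indicate a misapplied tag.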

How frequently should I update my XML sitemap?

Regular updates to your XML sitemap are crucial for accurate indexing. Any changes to your site’s structure, new content additions, or removal of outdated pages should prompt an update to your XML sitemap.

Keeping it current ensures that search engines can efficiently discover and index your latest content.
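As a rough aid for keeping the sitemap current, the sketch below parses a sitemap with Python's `xml.etree.ElementTree` and flags duplicate URLs and entries that carry no `<lastmod>` date. The sample `SITEMAP_XML` and the function name `audit_sitemap` are invented for this example. Note that `<lastmod>` is optional in the sitemap protocol, so a missing date is a hint to review the entry rather than an error.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

# Hypothetical sitemap content; in practice, read your real sitemap.xml.
SITEMAP_XML = """<?xml version="1.0" encoding="UTF-8"?>
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://example.com/</loc><lastmod>2024-01-15</lastmod></url>
  <url><loc>https://example.com/blog/</loc></url>
  <url><loc>https://example.com/</loc><lastmod>2024-01-15</lastmod></url>
</urlset>
"""


def audit_sitemap(xml_text: str) -> dict[str, list[str]]:
    """Return duplicate URLs and URLs that have no <lastmod> date."""
    root = ET.fromstring(xml_text.encode("utf-8"))
    seen: set[str] = set()
    report: dict[str, list[str]] = {"duplicates": [], "missing_lastmod": []}
    for url in root.findall("sm:url", SITEMAP_NS):
        loc = (url.findtext("sm:loc", default="", namespaces=SITEMAP_NS) or "").strip()
        if loc in seen:
            report["duplicates"].append(loc)
        seen.add(loc)
        if url.find("sm:lastmod", SITEMAP_NS) is None:
            report["missing_lastmod"].append(loc)
    return report


print(audit_sitemap(SITEMAP_XML))
# {'duplicates': ['https://example.com/'], 'missing_lastmod': ['https://example.com/blog/']}
```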